In this week's Abundance Insider: AR-aided surgeries, remote human brain-to-brain collaboration, and a new flu-targeting antibody.

P.S. Send any tips to our team by clicking here, and send your friends and family to this link to subscribe to Abundance Insider.

P.P.S. Want to learn more about exponential technologies and home in on your MTP/Moonshot? Abundance Digital, a Singularity University program, includes 100+ hours of coursework and video archives for entrepreneurs like you. Keep up to date on exponential news and get feedback on your boldest ideas from an experienced, supportive community. Click here to learn more and sign up.

MediView XR raises $4.5 million to give surgeons X-ray vision with AR.

What it is: MediView XR recently raised US$4.5 million to further develop its Extended Reality Surgical Navigation system. Accessed through the Microsoft HoloLens, MediView’s product grants surgeons a form of “x-ray vision” when conducting cancer ablations and biopsies. The system generates a personalized 3D holographic model for each patient based on CT and MRI scans. Ultrasound imaging then updates the holographic display throughout the procedure. This process not only reduces exposure to the harmful x-ray radiation used in standard procedures today, but also improves visual acuity by translating 2D data into three dimensions. Surgeons can even move around the body while AR-overlaid visuals remain accurately mapped to the patient. Meanwhile, hand-tracking and voice commands allow surgeons to access any needed information on the spot. In its first set of human trials, MediView has already used its system on five live tumor patients and began a nine-patient trial in August. Leveraging its newly acquired capital, the company aims to achieve FDA approval by 2021.

Why it’s important: Surgeons around the world are forced to make sense of 2D images for 3D applications. MediView’s technology would eliminate this hurdle and, in doing so, reduce surgeon error. Personalized 3D visualizations could also be used to educate patients on their conditions in a more intuitive manner. The educational applications of AR extend to medical schools as well, where mapping real data into practice procedures could boost student engagement and learning. The success of tumor removal surgeries is largely dependent on how precisely surgeons can excise the tumor, ensuring no cancerous traces are left behind. As AR headsets grow increasingly sophisticated, precise 3D models (coupled with biomarkers injected in the bloodstream to mark tumor cells) could vastly improve patient outcomes. MediView’s CEO John Black, who has performed over 2,000 surgeries himself, aims to transform the way surgeons interact with real-time data visualizations.

Engineers develop a new way to remove carbon dioxide from air: The process could work on the gas at any concentrations, from power plant emissions to open air.

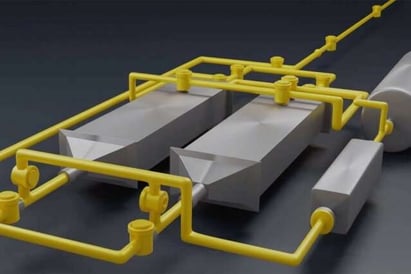

What it is: Scientists from MIT have developed a new method of extracting carbon dioxide from streams of air or feed gas, even at the far lower concentration levels found in the general atmosphere. The technology essentially works like a large battery: charging when CO2-laden gas passes over its polyanthraquinone-coated electrodes, and discharging when it releases a pure stream of carbon dioxide. Unlike some alternatives, the method requires no large pressure differences or chemical processes and can even supply its own power, courtesy of the discharge effect.

Why it’s important: Most carbon capture technologies require high concentrations of CO2 to work, or considerable energy inputs, such as high pressure differences or heat to run chemical processes. This device works at room temperature and regular pressure. Furthermore, it can generate both electricity and pure CO2 streams, valuable for a range of agricultural use cases, carbonation in beverages, and various other applications. Of course, the real benefit of scaling such a method involves our battle against climate change, where our ability to scrub the air of carbon dioxide could be a critical step in reversing environmental catastrophe.

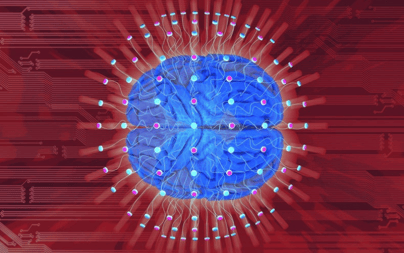

Scientists Demonstrate Direct Brain-to-Brain Communication in Humans.

What it is: For the first time, humans have achieved direct brain-to-brain communication through non-invasive electroencephalographs (EEGs). In a newly published study, three subjects were tasked with orienting a block correctly in a video game. Two subjects in separate rooms were designated as “senders” and could see the block, while the third “receiver” relied solely on sender signals to correctly position the block. EEG signals from the sender brains were converted into magnetic pulses delivered to the receiver via a transcranial magnetic stimulation (TMS) device. If the senders wanted to instruct rotation, for instance, they focused on a high-frequency light flashing, which the receiver would see as a flash of light in her visual field. To stop rotation, senders would focus on a low-frequency light, which the receiver would then interpret as light absence in the set time interval. Using this binary stop/go code, the five groups tested in this “BrainNet” system achieved over 80 percent accuracy in aligning the block.

Why it’s important: A leader in the brain-to-brain communication field, Miguel Nicolelis has previously conducted studies that linked rat brains through implanted electrodes, effectively creating an “organic computer.” The rat brains synchronized electrical activity to the same extent as a single brain, and the super-brain routinely outperformed individual rats in distinguishing two electrical patterns. Building on this research, the leaders of the “BrainNet” human study claim that their non-invasive device could connect a limitless number of individuals. As brain-to-brain signaling grows increasingly complex, human collaboration will reach extraordinary levels, allowing us to uncover novel ideas and thought processes. Rather than building “neural networks” in software, operations like BrainNet are truly linking networks of neurons, creating massive amounts of biological processing power. We are fast approaching the prediction of Nobel Prize-winning physicist Murray Gell-Mann, who envisioned “thoughts and feelings would be completely shared with none of the selectivity or deception that language permits.”

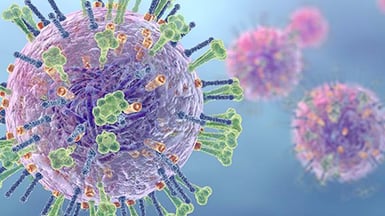

By targeting flu-enabling protein, antibody may protect against wide-ranging strains: The findings could lead to a universal flu vaccine and more effective emergency treatments.

What it is: Scientists recently discovered a new antibody that could greatly accelerate the pursuit of a universal flu vaccine. Experimenting on mice, the research team identified an antibody that binds to the protein neuraminidase, an enzyme essential for the influenza virus’ replication inside the body. While today’s most widely used flu drug, Tamiflu, inactivates neuraminidase, the enzyme exists in various forms, rendering Tamiflu and similar drugs ineffective against numerous flu strains. To test the versatility of their newly discovered antibody, the scientists administered lethal doses of different flu strains to a dozen mice and found that the new antibody protected all twelve from succumbing to infection.

Why it’s important: Fighting the flu every season has been an ongoing arms race between humanity and the virus, and one that is now particularly salient. As strains mutate and develop resistance to our existing medications, the need for alternative strategies has become far more pressing. This new research could accelerate our progress towards finally engineering a cure-all method for preventing and protecting against the flu, saving thousands of lives every year.

Elephants Under Attack Have An Unlikely Ally: Artificial Intelligence.

What it is: Researchers at Cornell University and elsewhere have recently started applying AI algorithms to track and save African Forest Elephants. As Forest Elephants have proven difficult to track visually, Cornell researcher Peter Wrege decided to set up microphones and listen for signs of elephant communication amidst rainforest trees. First, Wrege and his team at the Elephant Listening Project divided the rainforest into 25 km² grid squares. By placing audio recorders in every grid square, about 23 to 30 feet up in the treetops, the team has collected hundreds of thousands of hours of jungle sounds, more than any human could possibly tag and make sense of. By then transforming these audio files into spectrograms (visual representations of audio files), the researchers could apply a neural network to the data and isolate sounds from individual elephants. In practice, these algorithmic outcomes are now helping park rangers achieve an accurate census of the population, track elephant movement through the park over time, and even proactively prevent poaching activity in the bush.

Why it’s important: AI has now been heavily applied to narrow (and growing) use cases across medicine, financial forecasting, logistics, industrial design, navigation, and almost any mechanical or logic-based system you can think of. Yet today, it increasingly stands to help us understand unstructured environments and even animal-to-animal communication. Thanks to a convergence of computing power, sensors, and connectivity, methods such as those used by the Elephant Listening Project are now granting us a better understanding of extraordinarily complex natural ecosystems and species, and could aid in our pursuit to protect them.

First Look: Uber Unveils New Design For Uber Eats Delivery Drone.

What it is: Uber Eats and Uber Elevate plan to begin delivering dinner for two via drone next summer in San Diego. Unveiled at last week’s Forbes Under 30 Summit in Detroit, the delivery drone design features six rotors and rotating wings, and can carry a meal for two in its body. While the drone’s ideal trip time remains relatively short at eight minutes (including loading and unloading), the drone is capable of up to an 18-mile trip, divided into three six-mile legs (from launch to restaurant, to customer, and back to launch area). The current plan involves flying from restaurants to a staging location, from which an Uber driver would then travel the last mile for hand-off to the consumer. Yet with an eye to the future of automated last-mile delivery, Uber is also considering landing drones on the roofs of delivery cars.

Why it’s important: With Uber Eats’ expected launch in San Diego airspace less than a year away, we will soon begin to witness the commercialization of autonomous drones in everything from last-mile delivery to humanitarian aid. Not only are these trends slated to displace a significant percentage of cargo-related transit, but they will also fundamentally alter our urban networks and the way tomorrow’s businesses deliver personalized services.

Want more conversations like this?

Abundance 360 is a curated global community of 360 entrepreneurs, executives, and investors committed to understanding and leveraging exponential technologies to transform their businesses. A 3-day mastermind at the start of each year gives members information, insights and implementation tools to learn what technologies are going from deceptive to disruptive and are converging to create new business opportunities. To learn more and apply, visit A360.com.

Abundance Digital, a Singularity University program, is an online educational portal and community of abundance-minded entrepreneurs. You’ll find weekly video updates from Peter, a curated news feed of exponential news, and a place to share your bold ideas. Click here to learn more and sign up.

Know someone who would benefit from getting Abundance Insider? Send them to this link to sign up.

(*Both Abundance 360 and Abundance Digital are Singularity University programs.)